Context:

Artificial Intelligence (AI) is not just a new technology—it is a transformative force that is changing how societies function, economies grow, and governments deliver services. From diagnosing diseases to predicting crop failures, AI has the potential to improve lives across sectors. But it also carries serious risks such as the spread of misinformation, data misuse, deepfakes, and threats to national security.

- India has recognised both these opportunities and challenges. The Ministry of Electronics and Information Technology (MeitY) has therefore released the AI Governance Guidelines, a comprehensive framework to promote innovation while ensuring that AI development remains safe, ethical, and accountable.

- These guidelines are shaped by the vision of “AI for All”, in line with India’s long-term goal of achieving Viksit Bharat (Developed India) by 2047. The central idea is that AI should benefit not just a few but reach the last citizen: improving healthcare in villages, making education more accessible in local languages, and helping farmers adapt to changing weather patterns.

Vision and Guiding Principles:

India’s approach to AI is rooted in the principle of “Do No Harm”, which means promoting innovation responsibly while minimizing risks.

The framework emphasizes:

- Human-centric development: AI must serve people, not replace them.

- Inclusion and equity: Every section of society should benefit from AI advancements.

- Safety and accountability: Developers, companies, and regulators must share responsibility for preventing harm.

Key Pillars of the AI Governance Framework:

The guidelines are organised around six main pillars that together form the backbone of India’s AI governance structure.

1. Infrastructure: Building the Foundations of AI:

AI requires large amounts of data and computing power. The guidelines recommend:

- Expanding data and computing access: Platforms like AIKosh will offer high-quality India-specific datasets and subsidised access to high-performance computing systems (GPUs) to startups, researchers, and innovators.

- Integration with Digital Public Infrastructure (DPI): AI solutions should build on India’s successful DPI systems like Aadhaar, UPI, and DigiLocker to improve service delivery at scale.

- Private sector incentives: The government will encourage AI adoption among micro, small and medium enterprises (MSMEs) through tax rebates and AI-linked loans.

Together, these measures aim to make AI innovation both accessible and affordable.

2. Regulation and Policy: A Light-Touch but Flexible Approach:

India has chosen not to immediately introduce a strict, standalone AI law. Instead, it will use existing laws while making targeted amendments where necessary.

- Existing laws in use: The IT Act (2000) and the Digital Personal Data Protection Act (2023) will serve as the main legal foundations for AI regulation.

- Targeted amendments: Updates will be made to address new issues such as content authentication, liability, and copyright.

- Deepfake and misinformation control: The government plans to require visible labelling of AI-generated content to prevent fake news and digital impersonation.

- Global cooperation: India will also work with other countries to develop international AI standards and ethical frameworks.

3. Risk Mitigation: Anticipating and Preventing Harm:

AI systems can fail or cause harm if not properly monitored. To handle this, the guidelines propose:

- An India-specific risk assessment framework, tailored to local realities.

- The use of voluntary codes of practice and self-regulatory mechanisms for industries.

- Techno-legal safeguards, where privacy, fairness, and transparency are built directly into system design.

4. Accountability: Defining Responsibility in the AI Ecosystem:

The framework introduces a graded liability system — meaning that the level of responsibility depends on how risky or sensitive an AI application is.

Key expectations include:

- Grievance redressal mechanisms within organisations for users affected by AI decisions.

- Transparency reporting on how AI systems are trained, tested, and monitored.

- Self-certification by companies to confirm compliance with ethical and technical standards.

5. Capacity Building: Empowering People and Institutions:

AI adoption will succeed only if people understand it. The guidelines stress education and training across all levels:

- AI literacy for citizens: Public awareness campaigns to help people understand how AI impacts their lives.

- Training for government officials and law enforcement: Helping them use AI responsibly in governance, policing, and administration.

- Upskilling programs: Expanding existing skill development schemes to include smaller towns, universities, and technical institutes.

6. Institutional Framework: Coordinating Efforts Across Government:

A “whole-of-government” model has been proposed to ensure smooth coordination and policy implementation. Three main institutions are recommended:

1. AI Governance Group (AIGG): Responsible for overall strategy, coordination, and policy decisions.

2. Technology & Policy Expert Committee (TPEC): A body of experts to provide technical and ethical guidance.

3. AI Safety Institute (AISI): A specialised organisation to research and monitor AI safety, risk management, and system reliability.

Legal and Sectoral Frameworks Supporting AI Governance:

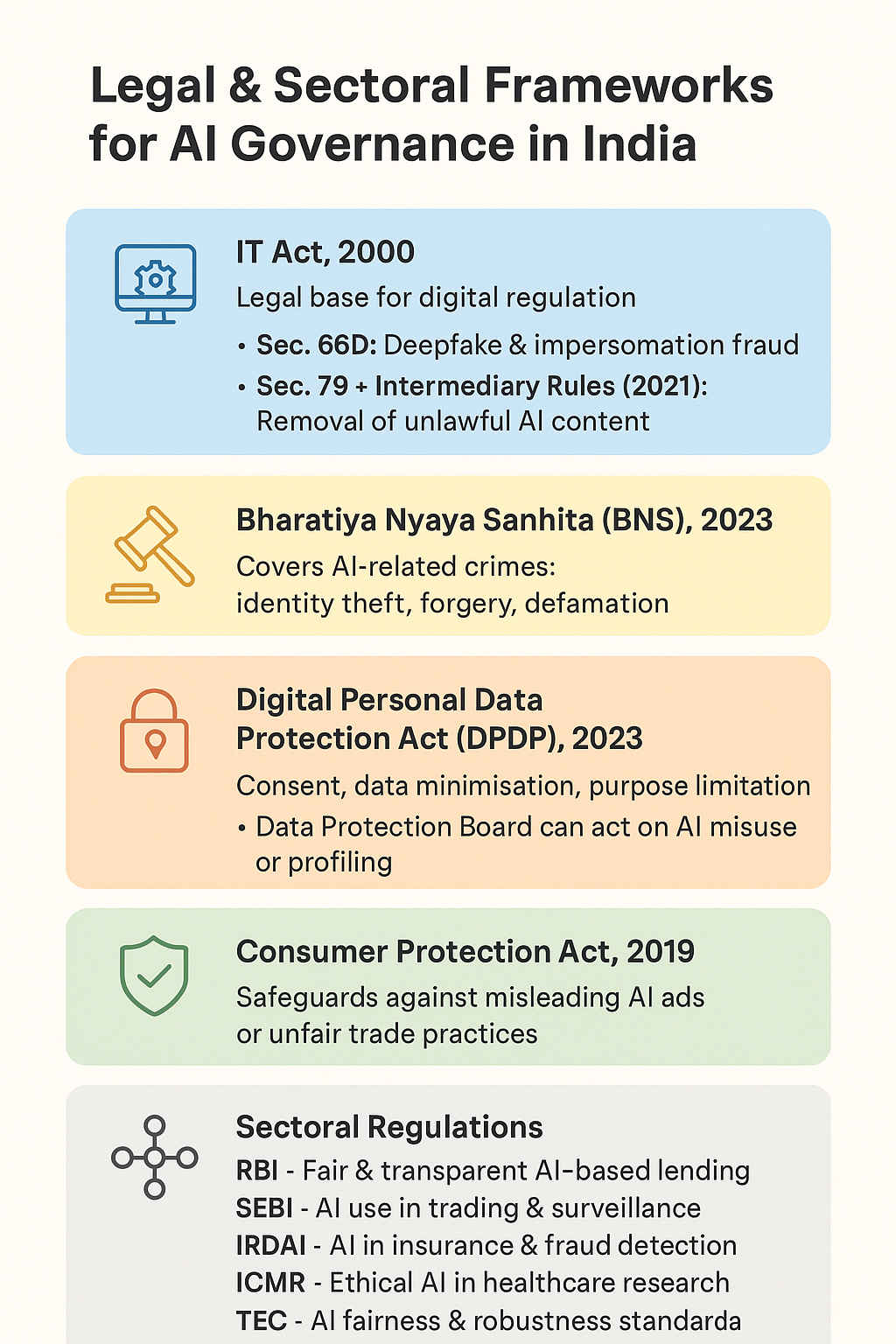

1. IT Act, 2000:

Forms the legal foundation for digital regulation in India.

- Section 66D: Addresses cheating through impersonation — relevant for deepfakes.

- Section 79 and 2021 Intermediary Rules: Require online platforms to remove unlawful or harmful AI-generated content.

2. Bharatiya Nyaya Sanhita (BNS), 2023: Covers cybercrimes and AI-related offences such as identity theft, forgery, and defamation.

3. Digital Personal Data Protection Act (DPDP), 2023: Introduces strict rules on consent, data minimisation, and purpose limitation — directly relevant to AI model training. The Data Protection Board can take action against misuse of AI-driven profiling.

4. Consumer Protection Act, 2019: Protects consumers from misleading AI advertisements or unfair trade practices — for example, when AI tools misrepresent financial or healthcare services.

5. Sectoral Regulations:

Different regulators are already integrating AI-specific safeguards into their domains:

- RBI: Requires fairness and transparency in AI-based credit scoring and digital lending.

- SEBI: Monitors AI use in stock trading and market surveillance.

- IRDAI: Oversees AI in insurance underwriting and fraud detection.

- ICMR: Mandates ethical use of AI in biomedical research and healthcare.

- TEC: Develops voluntary standards for fairness and robustness of AI systems in telecom networks.

- CERT-In and NCIIPC: Ensure timely reporting of cybersecurity incidents involving AI systems.

- BIS: Works with international bodies to develop Indian standards for trustworthy AI.

Emerging Concerns:

- Even as India promotes responsible AI, concerns have been raised about government officials using foreign AI platforms.

- For instance, if a bureaucrat uploads a confidential file to a foreign AI chatbot for summarisation, that data could be stored, analysed, or even misused by the company operating the service.

- Experts worry that such practices could expose sensitive government information or allow outside entities to infer patterns about national priorities and strategies.

- Hence, discussions are underway about protecting official systems from foreign AI tools and building secure domestic alternatives for internal use.

Tackling Deepfakes and Misinformation:

The government is preparing amendments to the IT Rules to make platforms responsible for labelling AI-generated content.

- Users must declare whether their content is synthetically generated (e.g., deepfakes).

- Platforms must deploy tools to verify these declarations and attach visible labels to such content.

- If they fail to comply, they could lose the legal protection they currently enjoy under Section 79 of the IT Act.

Conclusion:

India’s AI Governance Guidelines represent a major milestone in shaping a responsible AI ecosystem. Instead of rushing to impose blanket restrictions, the government has chosen a balanced and practical path: encouraging innovation while safeguarding people’s rights and national interests. By combining existing legal frameworks, clear accountability systems, and capacity building, India is building a model that other developing nations could adapt. As the country prepares to host the India–AI Impact Summit 2026, this framework signals India’s intent to lead the global conversation on ethical and human-centric AI, ensuring that technology serves humanity rather than the other way around.

| UPSC/PSC Main Question: Discuss the need for an institutional framework for Artificial Intelligence (AI) governance in India. How can such a framework balance innovation with ethical, legal, and social accountability? |